|

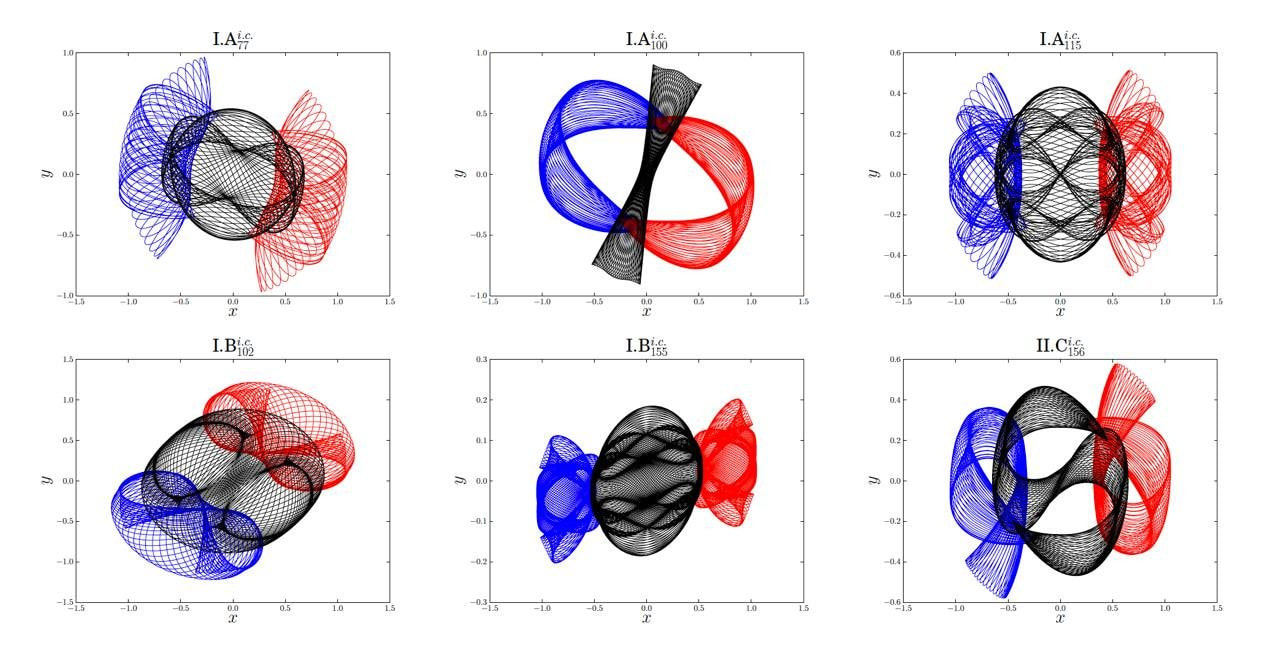

Amir Sariri Rotman School of Management, University of Toronto Roger Melko Perimeter Institute for Theoretical Physics Department of Physics and Astronomy, University of Waterloo Peter Wittek Creative Destruction Lab, University of Toronto In August 2017, I participated in the 34th International Conference on Machine Learning, one of the world’s most important academic events in the field of machine learning. I am going to guess I was the only economist-in-training who took a twenty-two-hour trip from Toronto to Sydney to participate in a conference that, at first glance, could not be more irrelevant to economics. But as a researcher interested in micro and macroeconomic implications of the dramatic progress in machine learning research and application, I had my own reasons to make this effort. When I attended the conference, it was only a few months after we had launched the world’s first entrepreneurship program for quantum machine learning startups, and only a couple of weeks before the program officially started in September. So, as Australian Federal Minister of Industry was giving his welcome message to conference participants, I wondered what would happen if I were to go up onto that stage in this enormous theatre full of machine learning scientists, shout, “Quantum Machine Learning,” then freeze time and then read and record everyone’s mind! The most frequent thought I’d see in the audience’s heads is probably something along the lines of “crazy guy!” But apart from that, would at least some of them be intrigued? Or would they ask,”What can this QML of yours do that our classical machine learning can’t?” As it turns out, this is the big question that everyone is asking. Given the newness of this frontier of science, there have been very few people who have found real applications where quantum machine learning significantly outperforms classical machine learning. Nevertheless, here I set out to present an application area in which quantum machine learning can in fact outperform classical machine learning. In an earlier blogpost, Peter Wittek and I provided a high-level idea about leveraging quantum computers in enhanced machine learning. We also touched on some of the major challenges and opportunities ahead for scientific and technological progress in QML. In order to present an application area in which QML outperforms machine learning, Peter and I teamed up with Roger Melko to dive deeper into one of the application areas in which QML can produce staggering improvements in results over its classical counterpart. Roger is a quantum many-body physics professor at the University of Waterloo and affiliates with the Perimeter Institute and the Institute for Quantum Computing. He is a Chief Scientist at the Creative Destruction Lab and engages in various capacities with the participants of CDL’s program to help them build scalable QML technologies that solve difficult commercial problems left unsolved by our current supercomputers. The case we would like to explore here is the application of QML in the design of chemical compounds. However, in order to fully understand the magnitude of the technological and commercial value of QML in this particular case, it is critical to have an overview of the field of quantum chemistry and the concept of the many-body problem in physics. Assemblies of electrons, nuclei, magnetic moments, atoms or qubits, such as those encountered in molecules, materials, or quantum computers, are fundamentally complex. This means that understanding the behavior that arises from the microscopic equations of motion is typically intractable, even for numerical simulations performed on massive supercomputers. Manifestations of this complexity are encountered every day by scientists and engineers working on understanding novel matter, materials, devices, and molecules. It is the fundamental roadblock to many areas of progress in science and technology, such as building ultra strong, lightweight materials, superconductors for advanced energy applications, or novel substrates for classical and quantum computing. An everyday manifestation of such complexity is in drug discovery, where investigators routinely have difficulty in predicting the behavior of chemical compounds, like those used in anticancer drugs. In each of these applications, there are so many in-motion particles of so many complex compounds that modeling how each of these particles interact and affect each other is essentially impossible. This complexity has its origins in the size of the quantum state space, which grows exponentially with the number of particles. Such exponential growth is reminiscent of the “curse of dimensionality,” a fundamental problem encountered in many machine learning tasks. A main goal of quantum chemistry is to predict the structure, stability, and reactivity of molecules. In principle, this requires solving the Schrödinger equation for the many-electron problem. Think of the Schrödinger equation as the only lock that, if you open it, you can predict all the chemical properties of a compound. The exact solution to this equation is very difficult to obtain. In fact, the Schrödinger equation has only been solved for very simple quantum objects, such as the hydrogen atom. In cases involving more particles (“bodies”) than just a proton and an electron, the solution must be approximated - this is the many-body problem in quantum physics. A manifestation of the classical equivalent to the many-body problem is the three-body problem, for example the one involving the moon, the earth, and the sun. The motion for this system of celestial objects does not have an analytical solution - that is, we cannot on paper solve all the ways in which these three masses affect each other. Just recently, two scientists, XiaoMing Li and ShiJun Liao at Shanghai Jiaotong University, China, have determined roughly 700 new families of periodic orbits for such three-body systems. Back to the quantum case: Calculations for approximating the Schrödinger equation are routinely done in chemistry departments at universities around the world, for a broad range of molecular systems. Typically, various approximation schemes help circumvent the “curse of dimensionality.” However, when one requires a detailed and accurate picture of how all of the electrons interact with each other in a molecule, standard approximations may break down. Therefore, in many important cases, chemists remain in the dark. In order to perform accurate molecular electronic structure calculations, the essential quantum complexity of the many-body electron problem must be tackled. If successful, one would be able to predict the outcome of many chemical reactions, the characteristics of novel drug candidates, the properties of new materials, and even the structures and behaviour of the building blocks of life such as proteins, sugars, and DNA.

This leads us to the question, “What kind of computer are we going to use to simulate physics?” This is the question Richard Feynman asked in his lecture on quantum computing in 1981. He proposed the idea that with a suitable class of quantum computers one could imitate any quantum system, including the ones seen in chemistry. Feynman wondered whether quantum physics could be simulated by a universal computer - that is, a computer that can perfectly simulate nature. He was specifically encouraging people who are trying to build computer simulations of the world to think about quantum mechanics and look at computers from a different point of view. Seth Lloyd, a physics professor at MIT and a Chief Scientist at the Creative Destruction Lab, was the first scientist to confirm that exponential speedups for simulations using quantum computers are possible. Lloyd is also a pioneer of the idea of quantum-enhanced machine learning. An interesting challenge here is that problems such as those in many-body physics are so complex that we must resort to gross simplifications in order to run simulations on classical computers. In the process, we lose properties that affect both the quantitative and the qualitative aspects of the simulation. Just recently, though, a group of researchers led by ETH professors Markus Reiher and Matthias Troyer showed that in fact a quantum computer with a number of qubits that is within reach for early quantum devices can calculate the reaction mechanism of a complex chemical reaction in a few days. The researchers chose an enzyme called nitrogenase, which enables certain microorganisms to split atmospheric nitrogen molecules. The reaction is so complex that it is nearly impossible to know exactly how it works and it remains as one of the greatest unsolved mysteries in chemistry. The last missing piece of the puzzle is what learning algorithms bring to the table. Reinforcement learning is a form of machine learning related to optimal control theory: it allows a learning algorithm to control some system under consideration without having an explicit model of the system. A paradigmatic example is playing Atari games: just by looking at the screen and poking the controls, a reinforcement learning agent can achieve superhuman performance. When it comes to quantum systems, reinforcement learning forms a transition point between the classical and the quantum regimes. To begin with, we can use reinforcement learning for classical learning and for control over quantum systems. For instance, we can use a learning algorithm to adapt to external noise in quantum computing or to compensate for decoherence, but also for estimating parameters in an unknown quantum process. Taking this idea further, we can quantize reinforcement learning. Quantum-enhanced reinforcement learning algorithms for large-scale quantum information processing systems are likely to be a major application area of quantum machine learning. Going back to our earlier example of drug discovery, we can run a quantum simulation of the chemical compounds in question and apply a quantum reinforcement algorithm to drive the search towards a particular goal. Integrating quantum simulations and quantum machine learning can take quantum many-body physics and quantum chemistry to the next level, where a quantized reinforcement learning algorithm would drive the discovery of new materials through their quantum mechanical properties. In summary, many important problems in quantum many-body physics, from materials design to drug discovery, suffer from an exponential “curse of dimensionality,” which makes them intractable to standard computational methods. Will machine learning be able to lift this curse? As pointed out by Feynman and others, some of such intractability can be overcome with quantum hardware, the likes of which we can almost see on the horizon today. What new discoveries will this hardware bring to the quantum many-body problem? Finally, will the combination of quantum hardware and machine learning - i.e., “quantum machine learning” - provide new, unprecedented power to tackle the Schrodinger equation? Perhaps some fledgeling startup team at CDL will be the first to find out.

2 Comments

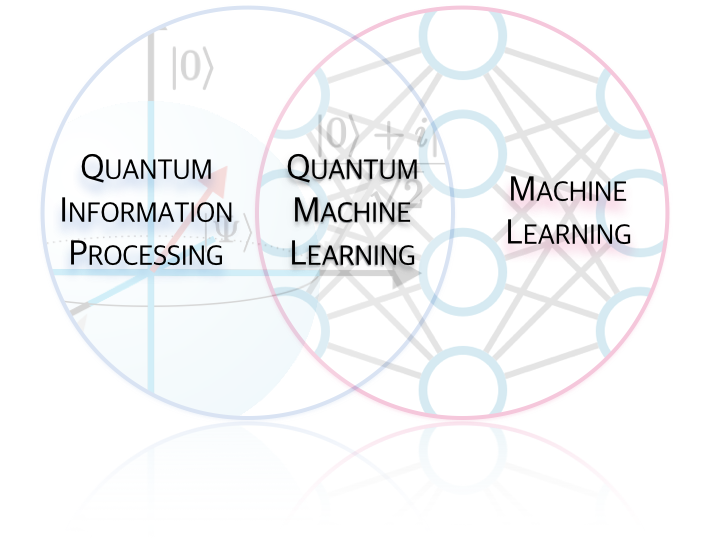

Amir Sariri Rotman School of Management, University of Toronto Peter Wittek ICFO-Institut de Ciencies Fotoniques, The Barcelona Institute of Science and Technology Just five months after I had started working with my doctoral supervisor on an economic research project on the labour market for AI scientists, I came across a term that seemed intellectually “cool,”, but about whose scientific or technological meaning I was clueless - Quantum Machine Learning (QML). As a by-product of documenting research in artificial intelligence for several months, I had learned about various streams of AI research, especially Machine Learning. While trying to narrow my research questions, I could not resist thinking about how quantum machine learning is different from its classical version. Having Peter Wittek, a mathematician and QML researcher as a Chief Scientist of the Creative Destruction Lab (where I am a research fellow) made me think it would have been a shame if I did not collaborate with him to write an article on the latest advances in QML research. We sat together and decided to write this article with some light technical detail for those interested in this area as a future field of research or as a source of advanced technologies for startup ideas. In this article, we avoid unpacking quantum physics phenomena such as superposition and entanglement that are foundational to understanding how quantum computers work. Instead, we aim to describe the current state of research in quantum machine learning. We will explain some of the challenges researchers face in the quantum computing arena and briefly review some of the viable solutions to tackle those challenges. In some cases, we will also recount examples of hardware and platform developments by the industry that are relevant to these challenges. The transformative role of computer science has long been documented as the precursor to much of the scientific progress and economic growth in the modern world. We have come a long way since Alan Turing’s contributions to computer science and cryptanalysis in 1936, which laid the foundation of modern computers. However, scientists’ imagination has always been bounded by computational power: the speed and efficiency at which a number is calculated, or a problem is solved. Ideas for more efficient ways of solving scientific problems have been ahead of the state-of-the-art in computer technology. The three-billion-dollar Human Genome Project to determine the DNA sequence of the entire human genome provides a tangible example. Although the project was declared complete in 2003, scientists only had the faintest glimmer of what their information was really telling them, especially because they were immersed with large volumes of data that could take years to process and analyze. Diseases such as cancer each may have millions of DNA rearrangements compared to a normal cell, and even today we cannot fully read these rearrangements and process our readings fast and efficiently. A major barrier to sequencing more personal human genomes and analyzing this data has been computational power. The cost of data storage and efficiency of algorithms that processed data play a role, but computer speed has been the bottleneck. Demand for higher computational power has always exceeded the supply. Gordon Moore’s 1965 observation was that the number of transistors in each square inch of computer chips doubled approximately every 18 months; he predicted the doubling of computational speed every two years. The slope of progress in many fields of science that rely on computers, including genetics in the example above, has been characterized by Moore’s observation. Skeptics accurately predicted that this trend would be too optimistic in the long run. Physicists, too, told us how the laws of nature will put an end to this trend, since transistors cannot get smaller than the size of atoms. The demand today is, again, solving problems that cannot be efficiently solved by classical computers, which are becoming very hard to improve in terms of speed and efficiency. Hope was found three decades ago in quantum physics: computers that exploit quantum effects to compute certain problems faster. The idea is to imagine solving problems that are effectively impossible to solve with classical computers or that would take as long as a few hundred years on a modern supercomputer. An efficiency gain of this magnitude would be hard to dismiss, and a vast number of scientists, corporate research labs and government initiatives from around the world have focused their attention on processing information by taking advantage of quantum phenomena. At the same time, we’ve observed major advances in machine learning techniques and a rapidly growing number of scientists who have focused on taking advantage of quantum effects to enhance machine learning. Quantum machine learning is the intersection of quantum information processing and machine learning. As a conceptual framework, think of it as a Venn diagram, where we have machine learning as one circle, quantum information processing as another, and the intersection of the two defines quantum machine learning (see “Quantum Machine Learning”, by Biamonte, Wittek, Pancotti, Robentrost, Wiebe and Lloyd). The scale of today’s information processing requirements pushes us to depart from laws of classical physics and resort to quantum physics to help us store, process, and learn from this data. One way to understand scientific work on quantum machine learning is to think of it as a two-way street. On the one hand, machine learning helps physicists harness and control quantum effects and phenomena in laboratories in order to better understand quantum systems and their behavior. On the other hand, quantum physics enhances performance of machine learning algorithms that are difficult to use with classical computers. The focus of this article is on the latter: quantum enhanced machine learning. The value proposition is clear; quantum versions of machine learning can learn from data faster and with higher precision, producing more accurate predictions. This quantum version of ML algorithms, however, happens to be harder to implement than it sounds.

First, due to the peculiar nature of how a quantum computer works, it is challenging to input classical data such as financial transactions or pictures. One way to tackle this problem is to use “quantum data”; it is much easier to deal with data that is already quantum, such as information about the inner working of a quantum computer or data that is produced by quantum sensors (i.e. sensors that produce data in quantum state). Another way is to perform what is called a quantum state preparation of the classical data. One way that scientists can do this in laboratories is to use polarization of photons in order to encode zeros and ones of classical data into physical degrees of freedom required for quantum processing of the data. The second challenge is quantum computers do not speak the languages that most programmers are familiar with (e.g. Python, C#, Javascript). In fact, programming quantum computers for efficient processing of data turns out to be more complicated than simply developing a new programming language. Nevertheless, basic quantum programming languages are evolving; Microsoft’s LIQUi|> (read “liquid”) is one of them, developed by Quantum Architectures and Computation Group at Microsoft Research. The full platform is available on GitHub. IBM Q, an IBM initiative to build commercial quantum computers, has its own graphical language platform called Quantum Experience whereby users can run algorithms on IBM’s quantum processor and work with individual qubits. We have also made improvements on computing paradigms. For instance, D-Wave’s quantum annealer is not a universal quantum computer, which means it cannot run any of the mentioned quantum programming languages, yet the hardware is useful for learning from data faster than with classical computers. Finally, after researchers have successfully fed quantum machines with data that it can read, and also written and implemented algorithms to process their data, they still have a problem: it is often hard to read the results accurately. The result of quantum operations is a quantum state, which can be thought of as a special probability distribution. Obtaining classical information about the quantum state requires “measurement”. To fully characterize a quantum system (i.e. obtain the results), a researcher would have to repeat the measurement (and quite possibly all computations that led to the state), just like when she is sampling an unknown probability distribution. They need to do this repetitive task in order to understand what the quantum system did to the data, and to be able to have a classical interpretation of the results. These challenges will require substantive work as QML transfers from research laboratories and into science-based startups. Going back to my research on the trajectory of artificial intelligence from an ignored idea in 1970s to one of the most exciting sources of scientific research and core technology of startups today, QML has the potential to bring about the next wave of technological shock and create a step function reduction in the cost of prediction (see “The Simple Economics of Machine Learning”, by Ajay Agrawal, Joshua Gans and Avi Goldfarb). Both machine learning and quantum information processing are rapidly growing and have their own uncertainties. Quantum machine learning is a space where these two strands of research act as complements of each other and every step forward in one is an opportunity of improvement in the other. Some of the complexities and challenges stated above are easier to manage when the goal is to build intelligent systems that learn from data. In addition, machine learning may itself enable scaling up quantum information processing, which can eventually lead to building scalable quantum computers. While collecting and storing vast amounts of data are becoming so cheap that it is no longer a concern, machine learning community is coming up with faster and more efficient methods and techniques to learn from this data. What determines how fast we capitalize on our data and models is computational power, and quantum machine learning is showing the much promise needed in order to be our best bet yet for building both intelligent systems and quantum computers. We are relaunching this website to make it an entry point to anybody interested in quantum-enhanced machine learning and AI, or in applications of classical learning protocols in quantum physics tasks. After two highly successful quantum machine learning meetings in South Africa, a workshop and a summer school, we are convinced that there is in fact a community and it is worth having a gateway to access it. At the moment, the main people behind the website are Maria Schuld, Peter Wittek, Francesco Petruccione, and Ilya Sinayskiy, but eventually we would like to make contributions easy and open to anybody in the community.

How can you get involved?

We welcome all suggestions to improve this website and help organize the community better. |

Archives |

RSS Feed

RSS Feed